Concepts in Probability

September 3, 2025

Basic Concepts of Probability

Intro to Probability

People often colloquially refer to probability…

- “What are the chances the Red Sox will win this weekend?”

- “What’s the chance of rain tomorrow?”

- “What is the chance that a patient responds to a new therapy?”

Formalizing concepts and terminology around probability theory is essential for better understanding probability (and statistics).

Random Experiments

A random experiment is an action or process that leads to one of several possible outcomes.

- For example, flipping a coin leads to two possible outcomes: either heads or tails.

The probability of an outcome is the proportion of times that the outcome would occur if the random phenomenon could be observed an infinite number of times.

- If a fair coin is flipped an infinite number of times, heads would be obtained 50% of the time.
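The long-run interpretation above can be illustrated with a small simulation. This is only a sketch: the function name `heads_proportion` and the fixed seed are choices made for this illustration, and a finite simulation can only approximate the idealized infinite sequence of flips.

```python
import random

def heads_proportion(n_flips, seed=0):
    """Return the fraction of heads in n_flips simulated fair-coin flips."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    heads = sum(rng.random() < 0.5 for _ in range(n_flips))
    return heads / n_flips

# The proportion of heads tends to settle near 0.5 as the number of flips grows.
for n in (10, 1_000, 100_000):
    print(n, heads_proportion(n))
```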

Outcomes and events

An outcome in a study is the result observable once the experiment has been conducted.

- The sum of the faces on two dice that have been rolled.

- The response of a patient treated with an experimental therapy.

An event is a collection of outcomes.

- The sum after rolling two dice is 7.

- 22 of 30 patients in a study have a good response to a therapy.

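Events can be referred to by letters. For example, if $A$ is the event that the sum after rolling two dice is 7, then $P(A)$ denotes the probability that $A$ occurs.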

Disjoint (Mutually Exclusive) Events

Two events or outcomes are called disjoint or mutually exclusive if they cannot both happen at the same time.

Here,

- The probability of rolling a 1, 2, 4, or 6 on a six-sided die is 4/6.
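The die example can be checked by enumeration. The sketch below assumes a fair six-sided die, so every face has probability 1/6; the helper `prob` is a name introduced here for illustration. Because the outcomes 1, 2, 4, and 6 are disjoint, adding their individual probabilities gives the same answer as counting the combined event directly.

```python
from fractions import Fraction

sample_space = {1, 2, 3, 4, 5, 6}  # faces of a fair six-sided die (assumed fair)

def prob(event):
    """Probability of an event (a set of faces) when all faces are equally likely."""
    return Fraction(len(event & sample_space), len(sample_space))

# Sum the probabilities of the four disjoint single-face outcomes.
total = sum((prob({face}) for face in (1, 2, 4, 6)), Fraction(0))

# Count the combined event directly.
direct = prob({1, 2, 4, 6})

print(total, direct)
```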

Addition Rule for Disjoint Events

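If $A_1$ and $A_2$ are disjoint events, then $P(A_1 \text{ or } A_2) = P(A_1) + P(A_2)$.

If there are $k$ disjoint events $A_1, \ldots, A_k$, then $P(A_1 \text{ or } \cdots \text{ or } A_k) = P(A_1) + \cdots + P(A_k)$.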

General Addition Rule

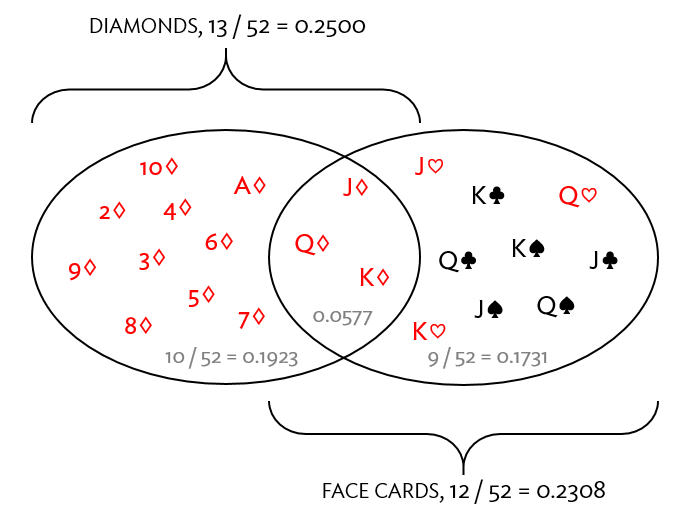

Suppose that we are interested in the probability of drawing a diamond or a face card out of a standard 52-card deck.

Does $P(\text{diamond or face card}) = P(\text{diamond}) + P(\text{face card})$?

General Addition Rule…

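No. The three cards that belong to both events (the jack, queen, and king of diamonds) are counted twice, so we must subtract the probability that both events occur: $P(\text{diamond or face card}) = \frac{13}{52} + \frac{12}{52} - \frac{3}{52} = \frac{22}{52}$.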

Thus, for any two events $A$ and $B$, $P(A \text{ or } B) = P(A) + P(B) - P(A \text{ and } B)$.
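The card example can be verified by listing all 52 cards. This is a minimal sketch; the deck representation and the helper `prob` are illustrative choices, and face cards are taken to be jacks, queens, and kings.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = set(product(ranks, suits))  # 52 (rank, suit) pairs

diamonds = {card for card in deck if card[1] == "diamonds"}
face_cards = {card for card in deck if card[0] in {"J", "Q", "K"}}

def prob(event):
    """Probability of drawing a card in `event` from a well-shuffled deck."""
    return Fraction(len(event), len(deck))

# Naive sum double counts the three diamond face cards.
naive = prob(diamonds) + prob(face_cards)

# General addition rule: subtract the overlap once.
corrected = prob(diamonds) + prob(face_cards) - prob(diamonds & face_cards)

print(naive, corrected, prob(diamonds | face_cards))
```

The corrected value agrees with the probability obtained by counting the union of the two events directly, while the naive sum is too large by 3/52.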

Sample Space

A sample space is an exhaustive list of mutually exclusive outcomes.

Suppose the possible

Given a sample space

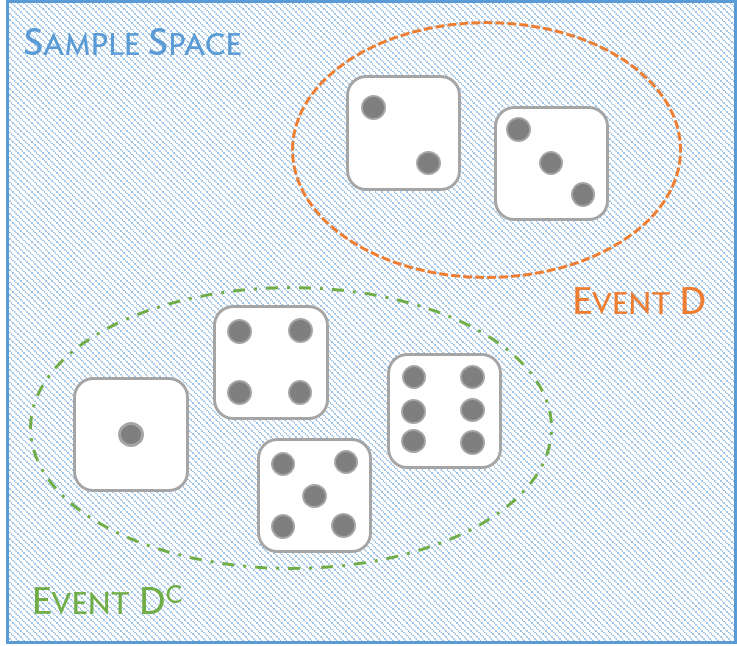

Complement of an Event

Let

The complement of

Complement of an Event…

The complement of an event

An event and its complement are mathematically related:

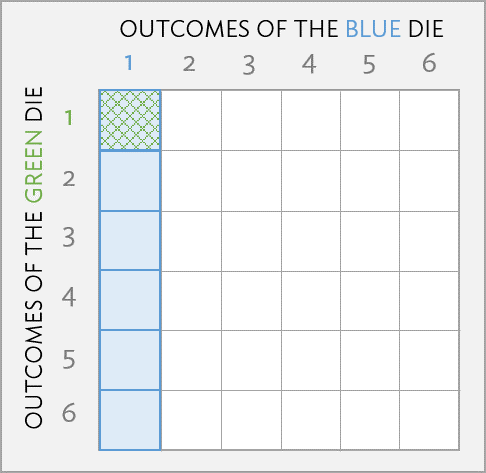
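In symbols, the relationship between an event and its complement is usually written as below. The notation $A$ and $A^c$ is assumed here, since the symbols on the original slide are not recoverable, and the dice example is an added illustration rather than one from the lecture.

```latex
% Complement rule
P(A) + P(A^c) = 1
\quad\Longrightarrow\quad
P(A) = 1 - P(A^c)

% Illustration: roll two fair dice; A = "at least one die shows a 1".
% A^c = "neither die shows a 1", which covers 5 x 5 = 25 of the 36 outcomes.
P(A) = 1 - P(A^c) = 1 - \frac{25}{36} = \frac{11}{36}
```

The complement is often the easier event to count, which is why the rule is useful in practice.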

Independent Events

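Two events $A$ and $B$ are independent if knowing that one occurred does not change the probability of the other. For independent events, $P(A \text{ and } B) = P(A)\,P(B)$.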

A blue die and a green die are rolled. What is the probability of rolling two 1’s?
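One way to answer the question is to enumerate all 36 equally likely (blue, green) pairs. The sketch below assumes both dice are fair; `prob` is a helper introduced for this example. It also checks that the answer equals the product of the two individual probabilities, as expected for independent rolls.

```python
from fractions import Fraction
from itertools import product

# All (blue, green) results for two fair six-sided dice.
rolls = list(product(range(1, 7), repeat=2))

def prob(predicate):
    """Fraction of equally likely rolls for which predicate(roll) is true."""
    return Fraction(sum(1 for roll in rolls if predicate(roll)), len(rolls))

p_blue_one = prob(lambda roll: roll[0] == 1)
p_green_one = prob(lambda roll: roll[1] == 1)
p_both_one = prob(lambda roll: roll == (1, 1))

print(p_blue_one, p_green_one, p_both_one)
```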

Conditional Probability

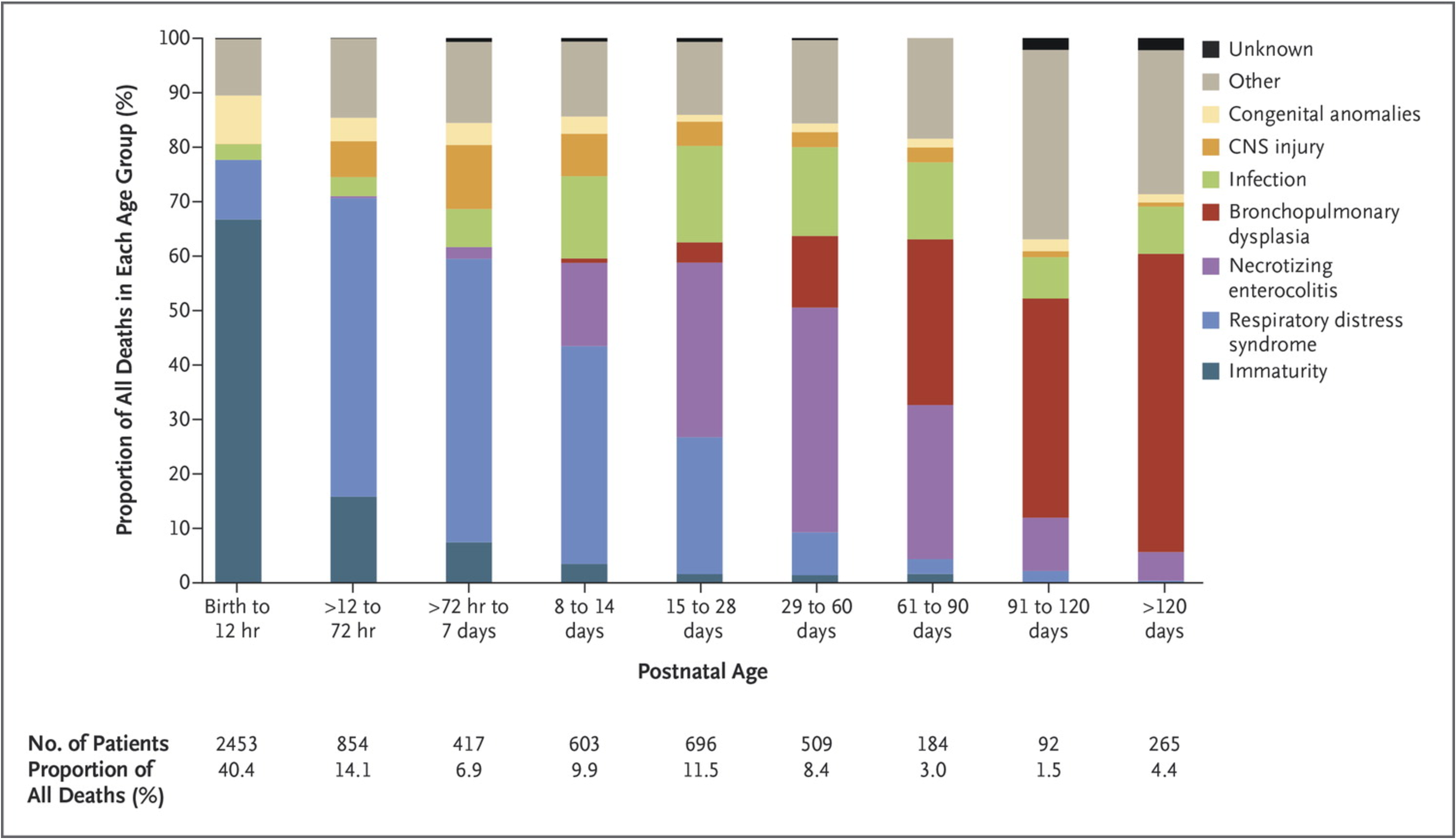

An example from childhood mortality

Published in Patel, et al., NEJM (2015) Vol 372, pp 331 - 340.

Conditional Probability: Intuition

Consider height in the US population.

What is the probability that a randomly selected individual in the population is taller than 6 feet, 4 inches?

- Suppose you learn that the selected individual is a professional basketball player.

- Does this change the probability that the individual is taller than 6 feet, 4 inches? Yes or no, and why?

Conditional Probability: Concept

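The conditional probability of an event $A$ given an event $B$, written $P(A \mid B)$, is the probability that $A$ occurs given that $B$ has occurred.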

Toss a fair coin three times. Let

- Conditioning on

- In this restricted set of outcomes,

Conditional Probability: Formal Definition

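As long as $P(B) > 0$, $P(A \mid B) = \dfrac{P(A \text{ and } B)}{P(B)}$.

From the definition, $P(A \text{ and } B) = P(A \mid B)\,P(B)$.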
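The definition can be tried out on three tosses of a fair coin. The events used on the original slide are not recoverable, so the two events below are hypothetical choices made for this sketch: $A$ is "at least two heads" and $B$ is "the first toss is heads". The code computes $P(A \mid B)$ both from the formula and by restricting attention to the outcomes in $B$.

```python
from fractions import Fraction
from itertools import product

# All 8 equally likely outcomes of three fair-coin tosses, e.g. ('H', 'T', 'H').
tosses = list(product("HT", repeat=3))

def prob(event):
    """Probability of an event (a set of outcomes) under equally likely tosses."""
    return Fraction(len(event), len(tosses))

# Hypothetical events chosen for illustration (not taken from the slides).
A = {t for t in tosses if t.count("H") >= 2}  # at least two heads
B = {t for t in tosses if t[0] == "H"}        # first toss is heads

# Formal definition: P(A | B) = P(A and B) / P(B), valid because P(B) > 0.
p_a_given_b = prob(A & B) / prob(B)

# Same quantity by counting within the restricted set of outcomes B.
p_restricted = Fraction(len(A & B), len(B))

print(prob(A), p_a_given_b, p_restricted)
```

Under these assumed events, conditioning on $B$ raises the probability of $A$ above its unconditional value, so the two events are not independent.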

Independence, Again…

A consequence of the definition of conditional probability:

- If $A$ and $B$ are independent and $P(B) > 0$, then $P(A \mid B) = \dfrac{P(A)\,P(B)}{P(B)} = P(A)$.

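Thus, independence means that conditioning has no effect: learning that one event occurred does not change the probability of the other. This is different from being disjoint. Disjoint events with nonzero probability are dependent, because if one occurs the other cannot.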

General Multiplication Rule

If $A$ and $B$ are any two events, then $P(A \text{ and } B) = P(A \mid B)\,P(B)$.

Rearranging the definition of conditional probability yields this rule.

Unlike the previously mentioned multiplication rule, this is valid for events that might not be independent.
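A short example with dependent events may help. The scenario below (drawing two cards without replacement and asking for two aces) is an added illustration, not one from the lecture. It checks that the probability of both events equals the probability of the first times the conditional probability of the second given the first.

```python
from fractions import Fraction
from itertools import permutations

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "diamonds", "hearts", "spades"]
deck = [(rank, suit) for rank in ranks for suit in suits]

# Every ordered pair of distinct cards: 52 * 51 equally likely two-card draws.
draws = list(permutations(deck, 2))

first_is_ace = [d for d in draws if d[0][0] == "A"]
both_aces = [d for d in first_is_ace if d[1][0] == "A"]

p_first = Fraction(len(first_is_ace), len(draws))                   # P(first is ace)
p_second_given_first = Fraction(len(both_aces), len(first_is_ace))  # P(second is ace | first is ace)
p_both = Fraction(len(both_aces), len(draws))                       # P(both are aces)

print(p_first, p_second_given_first, p_both)
```

The second draw depends on the first, so multiplying the two unconditional probabilities (1/13 each) would give the wrong answer here.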

Positive Predictive Value and Bayes’ Theorem

Pre-natal Testing for Trisomy 21, 13, and 18

Some congenital disorders are caused by an additional copy of a chromosome being attached to another in reproduction.

- Trisomy 21: Down syndrome, approximately 1 in 800 births

- Trisomy 13: Patau syndrome, physical and mental disabilities, approximately 1 in 16,000 newborns

- Trisomy 18: Edwards syndrome, nearly always fatal, either in stillbirth or infant mortality, occurs in about 1 in 6,000 births

Cell-free fetal DNA (cfDNA), copies of embryo DNA present in maternal blood, can be used as a non-invasive test.

Cell-free Fetal DNA-based Testing

Initial testing of the technology was done using archived samples of genetic material in children whose trisomy status was known.

The results are variable, but generally very good:

- Of 1000 unborn children with one of the disorders, about 980 have cfDNA that tests positive. The test has high sensitivity (true positive rate).

- Of 1000 unborn children without the disorders, about 995 test negative. The test has high specificity (true negative rate).
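The two summary figures translate directly into rates. The sketch below uses only the approximate counts quoted above; the variable names are illustrative. It also derives the two complementary error rates, which follow from the same counts.

```python
from fractions import Fraction

# Approximate counts quoted in the text.
affected_total, affected_test_positive = 1000, 980
unaffected_total, unaffected_test_negative = 1000, 995

sensitivity = Fraction(affected_test_positive, affected_total)      # true positive rate
specificity = Fraction(unaffected_test_negative, unaffected_total)  # true negative rate

# Complementary error rates.
false_negative_rate = 1 - sensitivity  # affected but test negative
false_positive_rate = 1 - specificity  # unaffected but test positive

print(float(sensitivity), float(specificity))
print(float(false_negative_rate), float(false_positive_rate))
```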

Cell-free Fetal DNA-based Testing…

The designers of a diagnostic test strive for accuracy: A test should have high sensitivity and specificity.

A family with an unborn child undergoing testing wants to know the likelihood of the condition being present if the test is positive.

Suppose a child has tested positive for trisomy 21. What is the probability the child does have trisomy 21, given the positive test result?

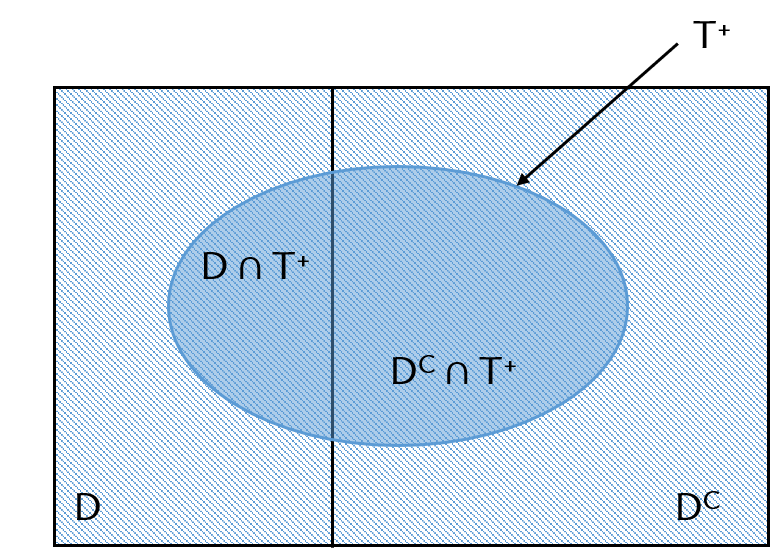

Defining Events in Diagnostic Testing

Events of interest in diagnostic testing:

Could use

Characteristics of a Diagnostic Test

The following measures are all characteristics of a diagnostic test.

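- Sensitivity = $P(\text{test positive} \mid \text{disease present})$

- Specificity = $P(\text{test negative} \mid \text{disease absent})$

- False negative rate = $P(\text{test negative} \mid \text{disease present})$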

- Note that

- Note that

- False positive rate = $P(\text{test positive} \mid \text{disease absent})$

- Note that

- Note that

Positive Predictive Value of a Test

Suppose an individual tests positive for a disease.

The positive predictive value (PPV) of a diagnostic test is the probability that the disease is present, given that the test returns a positive result: PPV = $P(\text{disease present} \mid \text{test positive})$

The characteristics of a diagnostic test include

Bayes’ Theorem (or Bayes’ Rule)

Bayes’ Theorem (simplest form): $P(A \mid B) = \dfrac{P(B \mid A)\,P(A)}{P(B)}$

Follows directly from the definition of conditional probability, noting that $P(A \text{ and } B) = P(B \mid A)\,P(A)$.

The Denominator

Bayes’ Theorem is usually stated differently, since, in many problems, $P(B)$ is not given directly and must be computed from other quantities.

Suppose

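Bayes’ Theorem can be written as: $P(A \mid B) = \dfrac{P(B \mid A)\,P(A)}{P(B \mid A)\,P(A) + P(B \mid A^c)\,P(A^c)}$, where $A^c$ is the complement of $A$.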

Bayes’ Theorem for Diagnostic Tests

Bayes’ Theorem for Diagnostic Tests…
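As a worked sketch, Bayes’ Theorem can be applied to the trisomy 21 question using figures quoted earlier: a prevalence of about 1 in 800 births, sensitivity of about 0.98, and specificity of about 0.995. Two assumptions are made here: the pooled sensitivity and specificity are taken to apply to trisomy 21 specifically, and the birth prevalence is used as the prior probability for the tested pregnancy. The function name `positive_predictive_value` is introduced for this example.

```python
from fractions import Fraction

def positive_predictive_value(sensitivity, specificity, prevalence):
    """P(disease present | test positive) via Bayes' Theorem."""
    true_positive = sensitivity * prevalence               # P(T+ | D) * P(D)
    false_positive = (1 - specificity) * (1 - prevalence)  # P(T+ | no D) * P(no D)
    return true_positive / (true_positive + false_positive)

# Figures from the text; applying them to trisomy 21 alone is an assumption.
sensitivity = Fraction(980, 1000)
specificity = Fraction(995, 1000)
prevalence = Fraction(1, 800)

ppv = positive_predictive_value(sensitivity, specificity, prevalence)
print(ppv, round(float(ppv), 3))
```

Under these assumptions the PPV is about 0.197: even with high sensitivity and specificity, roughly four in five positive results would be false positives, because the condition is rare and false positives among the many unaffected pregnancies outnumber true positives.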

HST 190: Introduction to Biostatistics