Introduction to Linear Regression

September 3, 2025

Regression

Regression methods examine the association between a response variable and a set of possible predictor variables (covariates).

- Linear regression posits an approximately linear relationship between the response and predictor variables.

- The response variable $Y$ is modeled as a function of the predictor variable(s) $X$.

- A simple linear regression model takes the form $Y = \beta_0 + \beta_1 X + \varepsilon$, where $\varepsilon$ is an error term with mean zero.

Simple linear regression quantifies how the mean of a response variable $Y$ varies with a single predictor $X$.

Multiple regression

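Multiple linear regression evaluates the relationship, assuming linearity, between the mean of a response variable $Y$ and several predictors $X_1, X_2, \ldots, X_p$.

This is conceptually similar to the simpler case of evaluating how the mean of $Y$ varies with a single predictor $X$.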

Linearity is an approximation; so, think of regression as projection onto a simpler worldview (“if the phenomenon were linear…”).

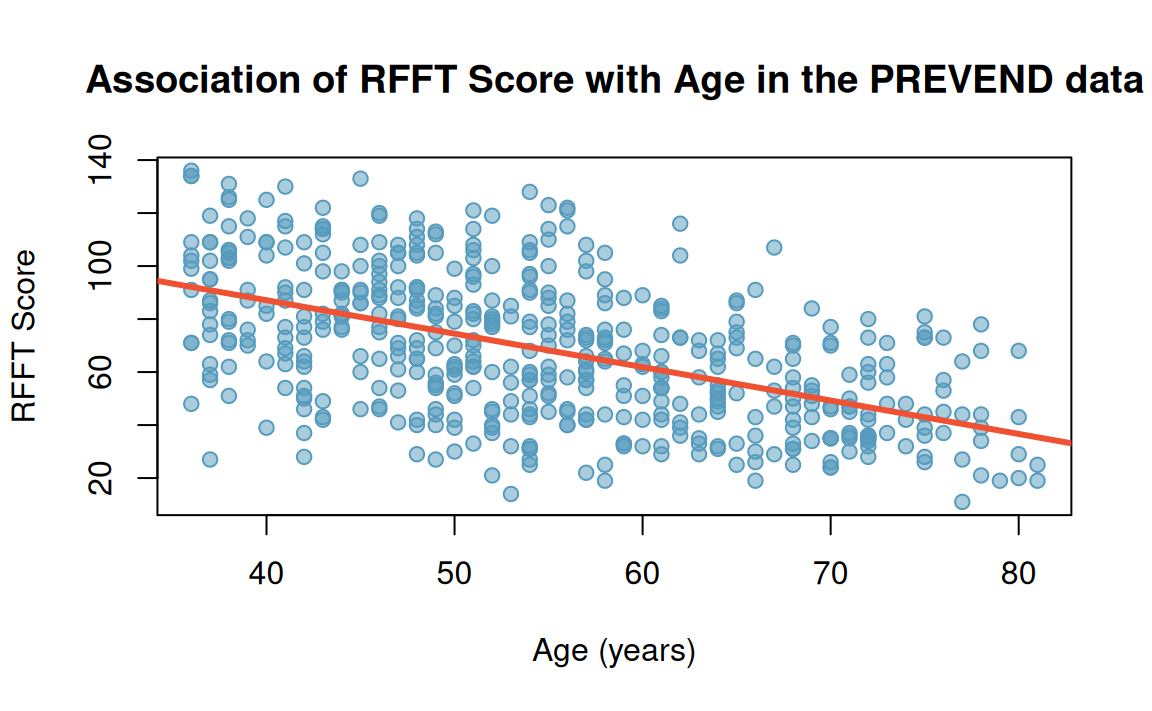

Back to the PREVEND study

As adults age, cognitive function changes over time, largely due to cerebrovascular and neurodegenerative changes. The PREVEND study measured clinical and demographic data for participants from 1997 to 2006.

- Data from 4,095 participants appear in the `prevend` dataset in the `oibiostat` R package.

- The Ruff Figural Fluency Test (RFFT) is used to assess cognitive function (planning and the ability to switch between different tasks).

Assumptions for linear regression

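A few assumptions justify using linear regression to describe how the mean of $Y$ varies with $X$:

- Independent observations: the pairs $(x_i, y_i)$ are independent of one another.

- Linearity: the mean of $Y$ changes linearly with $X$.

- Constant variability (homoscedasticity): variability of response $Y$ about the regression line is roughly constant across values of $X$.

- Approximate normality of residuals: the residuals are approximately normally distributed.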

What happens when these assumptions do not hold? 🤔

Linear regression via ordinary least squares

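The distance between an observed point $(x_i, y_i)$ and the corresponding point $(x_i, \hat{y}_i)$ on a fitted line $\hat{y} = b_0 + b_1 x$ is the residual $e_i = y_i - \hat{y}_i$.

For $n$ observations, the least squares regression line is the line whose coefficients $b_0$ and $b_1$ minimize the sum of squared residuals:

$$\sum_{i=1}^{n} e_i^2 = \sum_{i=1}^{n} (y_i - b_0 - b_1 x_i)^2$$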
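To see the "minimizes" claim directly, the R sketch below searches numerically for the line with the smallest sum of squared residuals and compares the result with `lm()`. The data are simulated; the true intercept 2, slope 0.5, and noise level are arbitrary assumptions chosen only for illustration.

```r
# Hypothetical data: true intercept 2, slope 0.5, noise SD 1 (arbitrary choices)
set.seed(1)
n <- 100
x <- runif(n, 0, 10)
y <- 2 + 0.5 * x + rnorm(n, sd = 1)

# Sum of squared residuals for a candidate line with coefficients b = c(b0, b1)
sse <- function(b) sum((y - b[1] - b[2] * x)^2)

# Analytic gradient of the SSE, which helps the optimizer converge precisely
sse_grad <- function(b) {
  r <- y - b[1] - b[2] * x
  c(-2 * sum(r), -2 * sum(r * x))
}

# Search numerically for the line that minimizes the SSE
opt <- optim(par = c(0, 0), fn = sse, gr = sse_grad, method = "BFGS")

# Compare with the least squares fit computed by lm()
fit <- lm(y ~ x)
print(round(opt$par, 4))
print(round(coef(fit), 4))
```

Both approaches should agree to several decimal places. `lm()` reaches the answer in closed form, with no iterative search.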

The mean squared error (MSE), a metric of prediction quality, is also based on the residuals: $\text{MSE} = \frac{1}{n} \sum_{i=1}^{n} e_i^2$.
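The R sketch below treats the MSE as the average squared residual. Some texts divide by $n - 2$ instead. The sketch computes both versions from an `lm()` fit on simulated data, whose coefficients and noise level are arbitrary assumptions. The $n - 2$ version matches the residual variance that `lm()` reports through `sigma()`.

```r
# Hypothetical data (arbitrary coefficients and noise level)
set.seed(2)
n <- 50
x <- rnorm(n)
y <- 1 + 3 * x + rnorm(n)

fit <- lm(y ~ x)
e <- residuals(fit)   # e_i = y_i - yhat_i

# MSE as the average squared residual
mse <- mean(e^2)

# lm() instead estimates the error variance with n - 2 in the denominator
sigma2_hat <- sum(e^2) / (n - 2)

print(c(mse = mse, sigma2_hat = sigma2_hat, from_lm = sigma(fit)^2))
```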

Coefficients in least squares linear regression
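For simple linear regression, the least squares coefficients have the well-known closed form $b_1 = r \, s_y / s_x$ and $b_0 = \bar{y} - b_1 \bar{x}$. Here $r$ is the sample correlation and $s_x, s_y$ are the sample standard deviations. A short R check on simulated data (values are arbitrary assumptions) confirms that these formulas reproduce `lm()`:

```r
# Hypothetical data with a negative slope (arbitrary values)
set.seed(3)
x <- rnorm(80, mean = 50, sd = 10)
y <- 10 - 0.8 * x + rnorm(80, sd = 5)

# Closed-form least squares coefficients
r <- cor(x, y)
b1 <- r * sd(y) / sd(x)
b0 <- mean(y) - b1 * mean(x)

# Same coefficients from lm()
fit <- lm(y ~ x)
print(c(b0 = b0, b1 = b1))
print(coef(fit))
```

The formula for $b_0$ shows that the least squares line always passes through the point $(\bar{x}, \bar{y})$.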

Linear regression: The population view

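For a population of ordered pairs $(X, Y)$, suppose $Y = \beta_0 + \beta_1 X + \varepsilon$, where the error $\varepsilon$ has mean zero at every value of $X$.

Since $E(\varepsilon \mid X = x) = 0$, it follows that $E(Y \mid X = x) = \beta_0 + \beta_1 x$.

So, the regression line is a statement about averages: What do we expect the mean of $Y$ to be when $X$ takes the value $x$?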
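The R simulation below illustrates the "statement about averages" reading. It uses a hypothetical large population in which $Y = 1 + 2X + \varepsilon$. The coefficients, error SD, and the choice of $X$ taking only the values 1 to 5 are all assumptions made so that each conditional mean can be estimated directly. The average of $Y$ at each value of $X$ should land close to the line.

```r
# Hypothetical population: Y = 1 + 2 X + error, with error mean zero
set.seed(4)
N <- 200000
X <- sample(1:5, N, replace = TRUE)
Y <- 1 + 2 * X + rnorm(N, sd = 3)

# Average of Y among population members with each value of X
cond_means <- tapply(Y, X, mean)

# Values on the population regression line at X = 1, ..., 5
line_values <- 1 + 2 * (1:5)

print(round(rbind(cond_means, line_values), 2))
```

Individual values of $Y$ scatter widely (SD 3) around the line. Only their averages follow it.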

Linear regression: Checking assumptions?

Assumptions of linear regression are independence of study units, linearity (in parameters), constant variability, and normality of residuals.

- Independence should be enforced by well-considered study design.

- Other assumptions may be checked empirically…but should we?

- Residual plots: scatterplots in which predicted values are on the horizontal axis and residuals are on the vertical axis.

- Normal probability plots: theoretical quantiles of a normal distribution versus observed quantiles (of residuals).

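Both diagnostic plots can be drawn with base R graphics, as in the sketch below. The data are simulated from a model that satisfies the assumptions (arbitrary coefficients and noise level), so the residual plot should show a patternless band around zero and the Q-Q plot should follow its reference line.

```r
# Hypothetical data that satisfy the linear regression assumptions
set.seed(5)
x <- runif(100, 0, 10)
y <- 3 + 1.5 * x + rnorm(100, sd = 2)
fit <- lm(y ~ x)

res <- residuals(fit)
fitted_vals <- fitted(fit)

# Residual plot: predicted values on the horizontal axis, residuals on the vertical axis
plot(fitted_vals, res, xlab = "Predicted value", ylab = "Residual")
abline(h = 0, lty = 2)

# Normal probability (Q-Q) plot of the residuals with a reference line
qqnorm(res)
qqline(res)
```

By construction, least squares residuals from a model with an intercept average to zero and are uncorrelated with the fitted values. A residual plot is therefore checked for patterns such as curvature or fanning, not for a nonzero mean.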

Linear regression with categorical predictors

Although the response variable in linear regression is necessarily numerical, the predictor may be either numerical or categorical.

Simple linear regression only accommodates categorical predictor variables with two levels.
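With a two-level factor, `lm()` codes one level as the reference (0) and the other as 1. The intercept is then the mean of the reference group, and the slope is the difference in group means. The R sketch below checks this on simulated data. The group labels "A" and "B" and their means are hypothetical.

```r
# Hypothetical two-group data: group A mean 10, group B mean 14
set.seed(6)
group <- factor(rep(c("A", "B"), each = 30))
y <- ifelse(group == "A", 10, 14) + rnorm(60, sd = 2)

fit <- lm(y ~ group)

# Sample mean of y within each group
means <- sapply(split(y, group), mean)

print(coef(fit))
print(means)
```

The coefficient is labeled `groupB` because R's default treatment coding uses the first factor level, "A", as the reference.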

Simple linear regression with a two-level categorical predictor

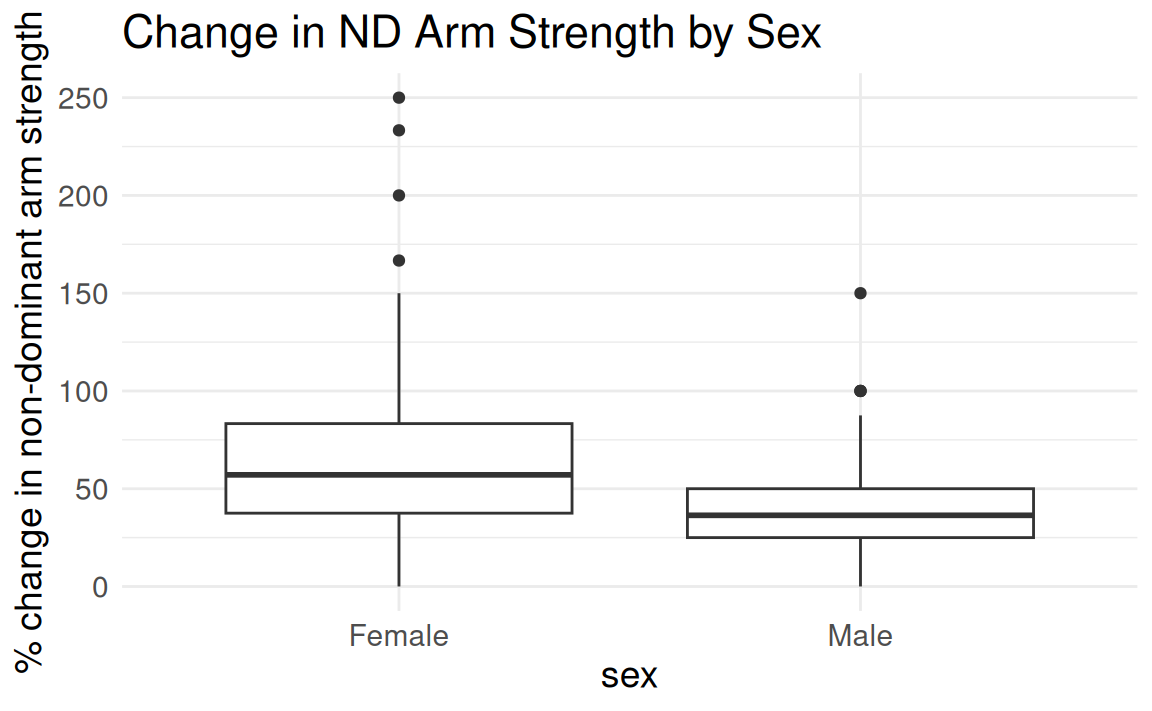

Back to FAMuSS: Comparing ndrm.ch by sex

Let’s re-examine the association between change in non-dominant arm strength after resistance training and sex in the FAMuSS data.

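Mean change in non-dominant arm strength (ndrm.ch) by sex:

Female Male

62.92720 39.23512

Least squares coefficients:

(Intercept) famuss$sexMale

62.92720 -23.69207

- Intercept: $b_0 = 62.93$, the mean of ndrm.ch among females (the reference level of sex).

- Slope: $b_1 = -23.69$, the difference in mean ndrm.ch between males and females ($39.24 - 62.93$); on average, males show a smaller change.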
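The R arithmetic check below uses only the numbers printed in the FAMuSS output. The intercept is the female mean, and the slope is the male mean minus the female mean. The `famuss$sexMale` label suggests the fit came from a call like `lm(famuss$ndrm.ch ~ famuss$sex)` using the `famuss` data from the oibiostat package. That call is an assumption and is not run here.

```r
# Group means of ndrm.ch as printed in the FAMuSS output
group_means <- c(Female = 62.92720, Male = 39.23512)

# Intercept = mean of the reference group (Female)
intercept <- group_means[["Female"]]

# Slope = difference in means, Male minus Female
slope <- group_means[["Male"]] - group_means[["Female"]]

print(c(intercept = intercept, slope = slope))
```

The computed slope, -23.69208, differs from the printed -23.69207 only in the last digit because the printed group means are themselves rounded.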

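Strength of a regression fit: Using $R^2$

- The correlation coefficient $r$ measures the strength of the linear association between $X$ and $Y$; in simple linear regression, $R^2 = r^2$.

- If a linear regression fit perfectly captured the variability in the observed data, then every point would lie on the line, all residuals would be zero, and $R^2 = 1$.

- The variability of the residuals about the regression line represents the variability remaining after the fit; $R^2$ is the proportion of the variability in $Y$ explained by the fit: $R^2 = 1 - \frac{\sum_i e_i^2}{\sum_i (y_i - \bar{y})^2}$.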
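The R sketch below computes $R^2$ three ways on simulated data (arbitrary coefficients and noise): as one minus the ratio of residual to total sum of squares, as the squared correlation, and as reported by `summary()`. For simple linear regression with an intercept, all three agree.

```r
# Hypothetical data (arbitrary values)
set.seed(7)
x <- rnorm(60)
y <- 2 + x + rnorm(60)
fit <- lm(y ~ x)
e <- residuals(fit)

# Proportion of variability in y explained by the fit
r_sq_from_ss <- 1 - sum(e^2) / sum((y - mean(y))^2)

# Squared correlation between x and y
r_sq_from_cor <- cor(x, y)^2

# Value reported by summary() for an lm fit
r_sq_from_lm <- summary(fit)$r.squared

print(c(r_sq_from_ss, r_sq_from_cor, r_sq_from_lm))
```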

Statistical inference in regression

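Assume the observed data $(x_i, y_i)$, $i = 1, \ldots, n$, follow the model $y_i = \beta_0 + \beta_1 x_i + \varepsilon_i$, where the errors $\varepsilon_i$ are independent and normally distributed with mean 0 and constant variance $\sigma^2$.

Under this assumption, the standardized slope estimate $\frac{b_1 - \beta_1}{\text{SE}(b_1)}$ follows a $t$ distribution with $n - 2$ degrees of freedom.

Goal: Inference for the slope $\beta_1$.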

Hypothesis testing in regression

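The null hypothesis $H_0: \beta_1 = 0$ states that there is no linear association between $X$ and $Y$; the usual alternative is $H_A: \beta_1 \neq 0$.

Use the $t$-statistic $t = \frac{b_1 - 0}{\text{SE}(b_1)}$, which follows a $t$ distribution with $n - 2$ degrees of freedom under $H_0$, to compute a p-value.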
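The test can be carried out by hand in R, as sketched below, and compared with the coefficient table from `summary()`. The data are simulated with arbitrary values. The sketch uses the standard formula $\text{SE}(b_1) = s / \sqrt{\sum_i (x_i - \bar{x})^2}$, where $s$ is the residual standard error.

```r
# Hypothetical data (arbitrary values)
set.seed(8)
n <- 40
x <- rnorm(n)
y <- 0.5 + 0.3 * x + rnorm(n)
fit <- lm(y ~ x)

b1 <- coef(fit)[["x"]]

# Residual standard error s, then SE(b1) = s / sqrt(sum((x - xbar)^2))
s <- sqrt(sum(residuals(fit)^2) / (n - 2))
se_b1 <- s / sqrt(sum((x - mean(x))^2))

# t-statistic for H0: beta1 = 0 and its two-sided p-value on n - 2 df
t_stat <- (b1 - 0) / se_b1
p_value <- 2 * pt(abs(t_stat), df = n - 2, lower.tail = FALSE)

# Coefficient table reported by summary()
tab <- summary(fit)$coefficients
print(c(t = t_stat, p = p_value))
print(tab["x", ])
```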

Confidence intervals in regression

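A $100(1 - \alpha)\%$ confidence interval for $\beta_1$ is $b_1 \pm t^\star_{n-2} \times \text{SE}(b_1)$, where $t^\star_{n-2}$ is the $1 - \alpha/2$ quantile of a $t$ distribution with $n - 2$ degrees of freedom.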
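A 95% interval for the slope can be assembled from $b_1$, its standard error, and a $t$ quantile. The R sketch below does this on simulated data (arbitrary values) and compares the result with `confint()`.

```r
# Hypothetical data (arbitrary values)
set.seed(9)
n <- 40
x <- rnorm(n)
y <- 0.5 + 0.3 * x + rnorm(n)
fit <- lm(y ~ x)

b1 <- coef(fit)[["x"]]
se_b1 <- summary(fit)$coefficients["x", "Std. Error"]

# t* is the 0.975 quantile of a t distribution with n - 2 df (95% interval)
t_star <- qt(0.975, df = n - 2)
ci_manual <- c(b1 - t_star * se_b1, b1 + t_star * se_b1)

# Interval computed by confint() for the slope
ci_lm <- confint(fit, "x", level = 0.95)

print(ci_manual)
print(ci_lm)
```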

Example: Linear Regression in an RCT

Consider a randomized controlled trial (RCT) that recruits

| L1 | L2 | X | Y |
|---|---|---|---|
| 1 | 1 | 0 | 1.39 |
| 0 | 0 | 0 | -0.18 |
| 0 | 0 | 0 | -0.20 |
| 1 | 1 | 1 | 3.61 |

The treatment

Statistical power and sample size

Question: A collaborator approaches you about this hypothetical RCT, wondering whether

In terms of hypothesis testing:

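The power of a statistical test is the probability that the test (correctly) rejects the null hypothesis when the alternative hypothesis is true. For a comparison of two groups, power depends on

- the hypothesized effect size (the difference in mean response between the two groups),

- the variance of the response in each of the two groups, and

- the sample size of each of the two groups.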
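Base R's `power.t.test()` computes power for a two-sample $t$-test from these ingredients. It assumes equal group sizes and a single common SD, which is a simplification of the separate group variances listed above. The effect size of 5, SD of 10, and group sizes in the R sketch below are hypothetical.

```r
# Hypothetical inputs: effect size 5 units, common SD 10, 50 participants per group
pw <- power.t.test(n = 50, delta = 5, sd = 10, sig.level = 0.05)
print(pw$power)

# Power grows with the per-group sample size, holding delta and sd fixed
sizes <- c(25, 50, 100, 200)
powers <- sapply(sizes, function(m) power.t.test(n = m, delta = 5, sd = 10)$power)
print(round(powers, 3))
```

Leaving `n` unspecified and passing `power = 0.8` instead makes the same function solve for the per-group sample size.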

Outcomes and errors in testing

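| State of nature | Result of test: Reject $H_0$ | Result of test: Fail to reject $H_0$ |
|---|---|---|
| $H_0$ is true | Type I error, probability $\alpha$ | No error, probability $1 - \alpha$ |
| $H_A$ is true | No error, probability $1 - \beta$ (power) | Type II error, probability $\beta$ |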

Choosing the right sample size

Study design includes calculating a study size (sample size) such that the probability of rejecting $H_0$ when $H_A$ is true (the power) is acceptably high.

It is important to have a precise estimate of an appropriate study size, since

- a study needs to be large enough to allow for sufficient power to detect a difference between groups when one exists, but

- not so unnecessarily large that it is cost-prohibitive or unethical.

Often, simulation is a quick and feasible way to conduct a power analysis.
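The R sketch below runs a simulation-based power analysis for a hypothetical two-arm trial. It repeatedly generates data under an assumed effect, fits `lm()`, and records how often the treatment coefficient's p-value falls below 0.05. The function name `simulate_power`, the effect size, the SD, and the arm size are illustrative assumptions. Because `lm()` with a binary treatment matches the equal-variance two-sample $t$-test, the simulated power can be compared with `power.t.test()`.

```r
# Hypothetical helper: estimated power of the lm() test for a treatment effect
simulate_power <- function(n_per_arm, delta, sd_y, n_sims = 2000, alpha = 0.05) {
  rejections <- replicate(n_sims, {
    treat <- rep(c(0, 1), each = n_per_arm)            # control = 0, treated = 1
    y <- delta * treat + rnorm(2 * n_per_arm, sd = sd_y)  # outcome under the assumed effect
    p <- summary(lm(y ~ treat))$coefficients["treat", "Pr(>|t|)"]
    p < alpha                                          # TRUE if H0 is rejected
  })
  mean(rejections)                                     # proportion of rejections
}

set.seed(10)
sim_power <- simulate_power(n_per_arm = 50, delta = 5, sd_y = 10)
exact_power <- power.t.test(n = 50, delta = 5, sd = 10)$power
print(c(simulated = sim_power, analytic = exact_power))
```

The advantage of simulation is flexibility. The data-generating step can be changed to include unequal variances, covariates, or dropout, cases where a closed-form power formula may not exist.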

Multiple regression

In most practical settings, more than one explanatory variable is likely to be associated with a response.

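Multiple linear regression evaluates the relationship between a response $Y$ and several predictors $X_1, X_2, \ldots, X_p$.

Multiple linear regression takes the form $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p + \varepsilon$.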
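With several predictors, the least squares estimates have a compact matrix form. Let $\mathbf{X}$ be a design matrix with a column of ones plus one column per predictor. The estimates $\mathbf{b}$ then solve the normal equations $\mathbf{X}^\top \mathbf{X} \mathbf{b} = \mathbf{X}^\top \mathbf{y}$. The R sketch below solves them directly for simulated data with two predictors (coefficients are arbitrary assumptions) and compares the result with `lm()`.

```r
# Hypothetical data with two predictors (arbitrary coefficients)
set.seed(11)
n <- 200
x1 <- rnorm(n)
x2 <- rnorm(n)
y <- 1 + 2 * x1 - 0.5 * x2 + rnorm(n)

# Design matrix: a column of ones for the intercept, then the predictors
X <- cbind(1, x1, x2)

# Solve the normal equations (X'X) b = X'y
b <- solve(crossprod(X), crossprod(X, y))

fit <- lm(y ~ x1 + x2)
print(drop(b))
print(coef(fit))
```

`lm()` itself uses a QR decomposition rather than forming $\mathbf{X}^\top \mathbf{X}$, which is numerically more stable. The results agree here because the predictors are far from collinear.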

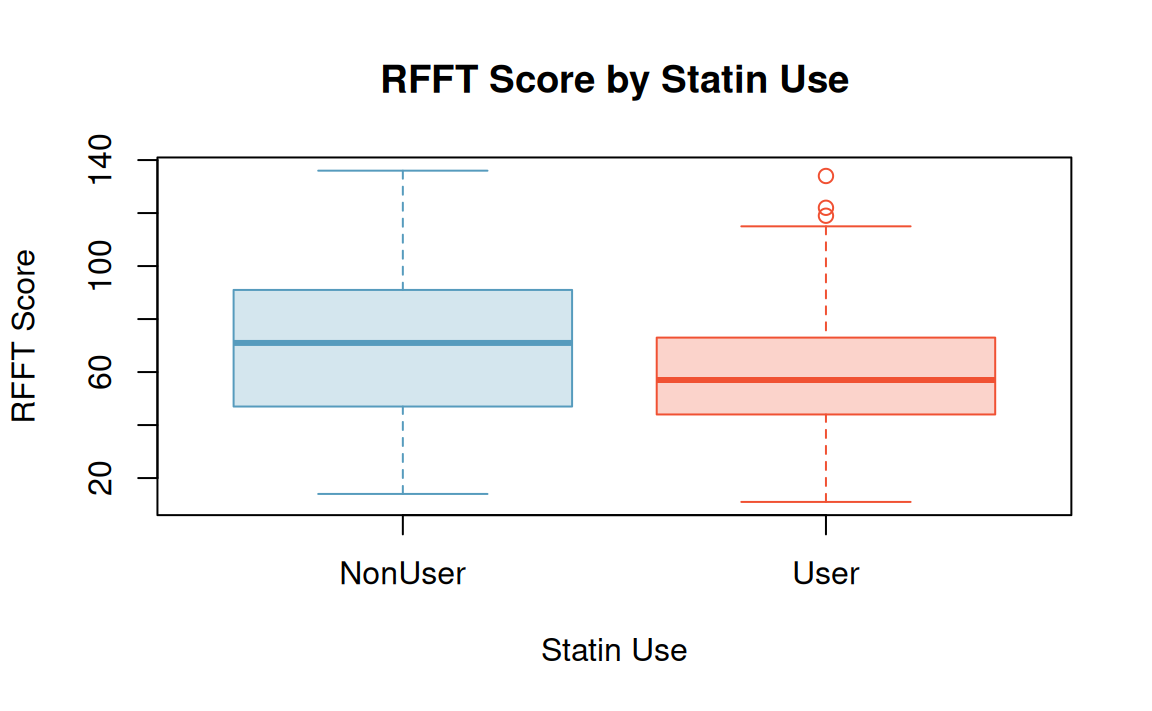

PREVEND: Statin use and cognitive function

The PREVEND study collected data on statin use and demographic factors.

- Statins are a class of drugs widely used to lower cholesterol.

- Recent guidelines for prescribing statins suggest statin use for almost half of Americans 40-75 years old, as well as nearly all men over 60.

- A few small (low

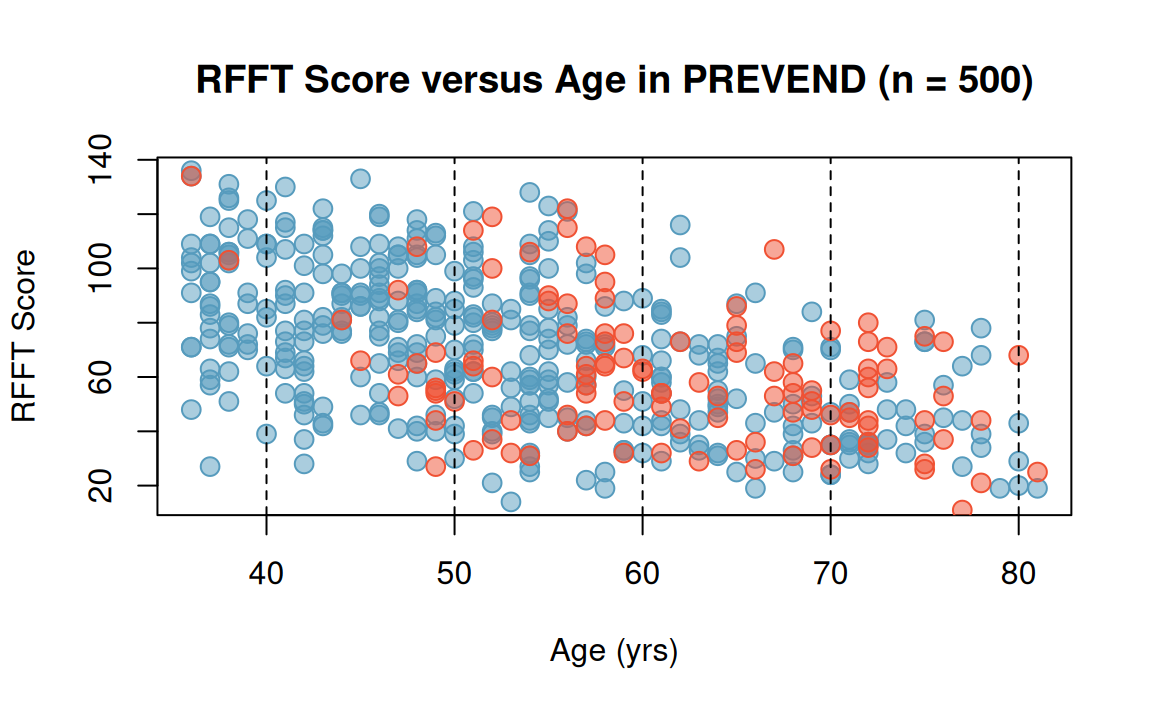

Age, statin use, and RFFT score

Red dots represent statin users; blue dots represent non-users.

Call:

lm(formula = RFFT ~ Statin, data = prevend.samp)

Coefficients:

(Intercept) StatinUser

70.71 -10.05

Interpretation of regression coefficients

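Multiple (linear) regression takes the form $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \cdots + \beta_p X_p + \varepsilon$.

Recall the correspondence of the regression equation with the conditional mean: $E(Y \mid X_1, \ldots, X_p) = \beta_0 + \beta_1 X_1 + \cdots + \beta_p X_p$.

The coefficient $\beta_j$ is the expected change in the mean of $Y$ associated with a one-unit increase in $X_j$, holding all other predictors constant.

Practically, a coefficient $\beta_j$ describes the association between $Y$ and $X_j$ after adjusting for the other predictors in the model.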
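The R simulation below shows why "holding other predictors constant" matters. It is a hypothetical setting, not the PREVEND data. Age drives both statin use and a cognitive score, while statin use has no direct effect on the score. The logistic model for statin use, the score equation, and all numeric values are assumptions. The unadjusted statin coefficient picks up the age difference between users and non-users. Adding age to the model moves the coefficient close to its true value of zero, a pattern similar to the statin coefficient changing once age enters the PREVEND model.

```r
# Hypothetical population: age affects both statin use and the score
set.seed(12)
n <- 5000
age <- runif(n, 40, 80)

# Older participants are more likely to use statins (assumed logistic relationship)
statin <- rbinom(n, 1, plogis(-8 + 0.14 * age))

# The score depends on age only; the true statin coefficient is zero
score <- 140 - 1.2 * age + rnorm(n, sd = 15)

# Statin coefficient without and with adjustment for age
unadjusted <- coef(lm(score ~ statin))[["statin"]]
adjusted <- coef(lm(score ~ statin + age))[["statin"]]

print(c(unadjusted = unadjusted, adjusted = adjusted))
```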

In action: RFFT vs. statin use and age

Fit the multiple regression with lm():

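# load the PREVEND sample (prevend.samp) from the oibiostat package
library(oibiostat)
data("prevend.samp")
# fit the linear model; the StatinUser label below implies Statin is coded as a factor
prevend_multreg <- lm(RFFT ~ Statin + Age, data = prevend.samp)
prevend_multreg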

Call:

lm(formula = RFFT ~ Statin + Age, data = prevend.samp)

Coefficients:

(Intercept) StatinUser Age

137.8822 0.8509 -1.2710

Assumptions for multiple regression

Analogous to those of simple linear regression…

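- Independence: units (observations) are independent of one another.

- Linearity: for each predictor variable $X_j$, the mean of $Y$ changes linearly with $X_j$ when the other predictors are held constant.

- Constant variability: the variability of the residuals is roughly constant across fitted values.

- Normality of residuals: the residuals are approximately normally distributed.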


HST 190: Introduction to Biostatistics