Regression: Linear, Logistic, Regularized

August 16, 2025

Categorical predictors

With a binary predictor, linear regression estimates the difference in the mean response between groups defined by the levels.

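For example, the estimated equation for the linear regression of RFFT score on statin use, using the data prevend.samp, is

$$\widehat{\text{RFFT}} = 70.71 - 10.05\,(\text{Statin}),$$

where Statin = 1 for statin users and 0 for non-users.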

- Mean RFFT score for individuals not using statins is 70.71.

- Mean RFFT score for individuals using statins is 10.05 points lower than that of non-users: 70.71 - 10.05 = 60.66.
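Here is a minimal R sketch of this fact using a made-up toy data set. The vectors `score` and `statin` are hypothetical stand-ins, not prevend.samp. With a 0/1 predictor, the intercept returned by `lm()` equals the non-user mean, and the slope equals the difference in group means.

```r
# Hypothetical toy data: RFFT-like scores for 3 non-users (0) and 3 users (1)
score  <- c(72, 68, 71, 61, 59, 62)
statin <- c(0, 0, 0, 1, 1, 1)

fit <- lm(score ~ statin)
coef(fit)

# Intercept = mean of non-users; slope = (mean of users) - (mean of non-users)
mean(score[statin == 0])
mean(score[statin == 1]) - mean(score[statin == 0])
```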

What about with more than two levels?

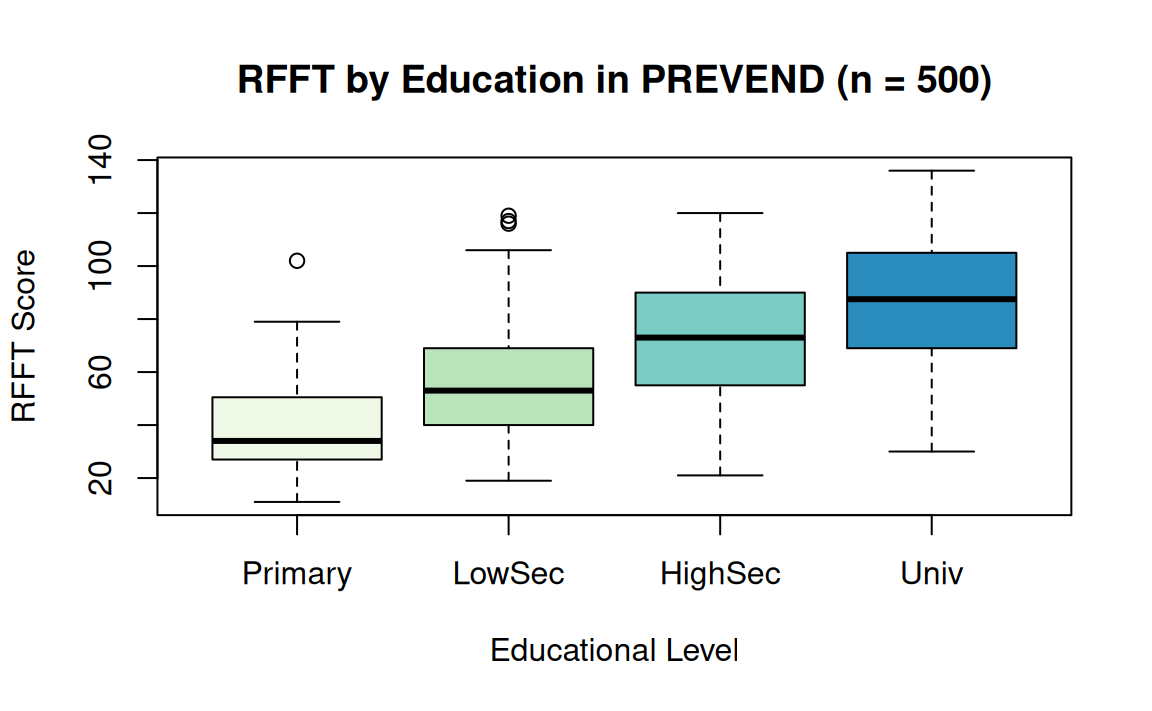

For example: Is RFFT score associated with education?

The variable Education indicates the highest level of education an individual completed in the Dutch educational system:

- 0: primary school

- 1: lower secondary school

- 2: higher secondary education

- 3: university education

Interpreting regression on Education

The baseline category represents individuals who completed at most primary school (Education = 0). The intercept is the sample mean RFFT score for this group.

Each coefficient gives the difference in estimated average RFFT relative to the baseline group. For example, the predicted increase for Univ is 44.96 points:

| Term | Estimate |
|---|---|
| (Intercept) | 40.94118 |
| EducationLowerSecond | 14.77857 |
| EducationHigherSecond | 32.13345 |
| EducationUniv | 44.96389 |

| Education | Predicted mean RFFT |
|---|---|
| Primary | 40.94118 |
| LowerSecond | 55.71975 |
| HigherSecond | 73.07463 |
| Univ | 85.90506 |
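The second table follows from the first: each education level's predicted mean is the intercept plus that level's coefficient. The short R sketch below uses the estimates copied from the first table. The vector name `b` is only illustrative.

```r
# Coefficients copied from the regression of RFFT on Education
b <- c(Intercept = 40.94118, LowerSecond = 14.77857,
       HigherSecond = 32.13345, Univ = 44.96389)

# Baseline (Primary) mean is the intercept; every other level adds its own coefficient
pred_means <- b[["Intercept"]] + c(Primary = 0, b[-1])
round(pred_means, 2)
```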

Inference for multiple regression

The coefficients of multiple regression

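Inference is usually about the slope parameters $\beta_1, \ldots, \beta_p$ of the model $E(Y) = \beta_0 + \beta_1 X_1 + \cdots + \beta_p X_p$. These parameters are estimated by $b_1, \ldots, b_p$.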

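Typically, the hypotheses of interest are $H_0: \beta_j = 0$ (no association between $X_j$ and $Y$ once the other predictors are accounted for) versus $H_A: \beta_j \neq 0$.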

Testing hypotheses about slope coefficients

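The test statistic for $H_0: \beta_j = 0$ is

$$t = \frac{b_j - 0}{SE(b_j)},$$

which, under $H_0$, follows a $t$ distribution with $n - p - 1$ degrees of freedom.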

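Then, a 95% confidence interval for the slope $\beta_j$ is

$$b_j \pm t^\star \times SE(b_j),$$

where $t^\star$ is the 97.5th percentile of the $t$ distribution with $n - p - 1$ degrees of freedom.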

The

The

There is sufficient evidence to reject $H_0$ at significance level $\alpha$ if the p-value is less than $\alpha$.

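summary(lm()) reports the estimate, standard error, $t$ statistic, and two-sided p-value for testing $H_0: \beta_j = 0$ for each coefficient.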
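This R sketch recomputes the test statistic, the p-value, and the 95% confidence interval for the EducationLowerSecond coefficient. It uses the estimate and standard error from the summary(lm()) output on the next slide. The values are typed in by hand and rounded, so the last digits may differ slightly from R's own.

```r
# Values copied from the EducationLowerSecond row of the summary(lm()) output
est <- 14.779   # estimated coefficient b_j
se  <- 3.686    # standard error SE(b_j)
df  <- 496      # residual degrees of freedom, n - p - 1 = 500 - 3 - 1

t_stat <- (est - 0) / se                            # test statistic for H0: beta_j = 0
p_val  <- 2 * pt(-abs(t_stat), df = df)             # two-sided p-value
ci     <- est + c(-1, 1) * qt(0.975, df = df) * se  # 95% confidence interval

round(t_stat, 3)
signif(p_val, 3)
round(ci, 2)
```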

Inference: RFFT vs. Education

```
Call:
lm(formula = RFFT ~ Education, data = prevend.samp)

Residuals:
    Min      1Q  Median      3Q     Max
-55.905 -15.975  -0.905  16.068  63.280

Coefficients:
                      Estimate Std. Error t value Pr(>|t|)
(Intercept)             40.941      3.203  12.783  < 2e-16 ***
EducationLowerSecond    14.779      3.686   4.009 7.04e-05 ***
EducationHigherSecond   32.133      3.763   8.539  < 2e-16 ***
EducationUniv           44.964      3.684  12.207  < 2e-16 ***
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 22.87 on 496 degrees of freedom
Multiple R-squared:  0.3072,  Adjusted R-squared:  0.303
F-statistic: 73.3 on 3 and 496 DF,  p-value: < 2.2e-16
```

Reanalyzing the PREVEND data

```r
# fit model adjusting for age, edu, cvd
model1 <- lm(RFFT ~ Statin + Age + Education + CVD,
             data = prevend.samp)
summary(model1)
```

```
Call:
lm(formula = RFFT ~ Statin + Age + Education + CVD, data = prevend.samp)

Residuals:
    Min      1Q  Median      3Q     Max
-56.348 -15.586  -0.136  13.795  63.935

Coefficients:
                      Estimate Std. Error t value Pr(>|t|)
(Intercept)           99.03507    6.33012  15.645  < 2e-16 ***
Statin                 4.69045    2.44802   1.916  0.05594 .
Age                   -0.92029    0.09041 -10.179  < 2e-16 ***
EducationLowerSecond  10.08831    3.37556   2.989  0.00294 **
EducationHigherSecond 21.30146    3.57768   5.954 4.98e-09 ***
EducationUniv         33.12464    3.54710   9.339  < 2e-16 ***
CVDPresent            -7.56655    3.65164  -2.072  0.03878 *
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 20.71 on 493 degrees of freedom
Multiple R-squared:  0.4355,  Adjusted R-squared:  0.4286
F-statistic: 63.38 on 6 and 493 DF,  p-value: < 2.2e-16
```

Interaction in regression

When performing multiple regression,

A statistical interaction describes the case when the impact of an explanatory variable on the response depends on the value of another explanatory variable.

Cholesterol vs. Age and Diabetes

```
  (Intercept)           Age   DiabetesYes
  4.800011340   0.007491805  -0.317665963
```
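The R check below uses the coefficients above, typed in by hand. Without an interaction term, the fitted lines for the two groups differ only by the DiabetesYes coefficient. The two lines are therefore parallel.

```r
# Coefficients copied from the additive model TotChol ~ Age + Diabetes above
b0 <- 4.800011340; b_age <- 0.007491805; b_diab <- -0.317665963

# Predicted total cholesterol at a few ages, without and with diabetes
age <- c(30, 50, 70)
no_diab  <- b0 + b_age * age
yes_diab <- b0 + b_age * age + b_diab

# The gap between the two lines is the same at every age
yes_diab - no_diab
```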


What if two distinct regressions were fit to evaluate total cholesterol and age (varying by diabetic status)?


Adding an interaction term

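Consider the model

$$E(\text{TotChol}) = \beta_0 + \beta_1\,\text{Age} + \beta_2\,\text{Diabetes} + \beta_3\,(\text{Age} \times \text{Diabetes}),$$

where Diabetes = 1 for individuals with diabetes and 0 otherwise.

The term $\beta_3\,(\text{Age} \times \text{Diabetes})$ is the interaction term. It allows the slope of age to differ between individuals with and without diabetes.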

Significance of an interaction term

```
Call:
lm(formula = TotChol ~ Age * Diabetes, data = nhanes.samp.adult.500)

Residuals:
    Min      1Q  Median      3Q     Max
-2.3587 -0.7448 -0.0845  0.6307  4.2480

Coefficients:
                 Estimate Std. Error t value Pr(>|t|)
(Intercept)      4.695703   0.159691  29.405  < 2e-16 ***
Age              0.009638   0.003108   3.101  0.00205 **
DiabetesYes      1.718704   0.763905   2.250  0.02492 *
Age:DiabetesYes -0.033452   0.012272  -2.726  0.00665 **
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.061 on 469 degrees of freedom
  (27 observations deleted due to missingness)
Multiple R-squared:  0.03229,  Adjusted R-squared:  0.0261
F-statistic: 5.216 on 3 and 469 DF,  p-value: 0.001498
```

Interpretation: For those with diabetes, increasing age has a negative (not positive!) association with total cholesterol (estimated slope $0.009638 - 0.033452 = -0.023814$).
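Setting DiabetesYes to 0 or 1 in the interaction model gives each group its own intercept and slope. Here is a minimal R sketch with the estimates typed in from the TotChol ~ Age * Diabetes output above.

```r
# Coefficients copied from the TotChol ~ Age * Diabetes output above
b0 <- 4.695703; b1 <- 0.009638; b2 <- 1.718704; b3 <- -0.033452

# Model: TotChol = b0 + b1*Age + b2*DiabetesYes + b3*Age*DiabetesYes
# Setting DiabetesYes = 0 or 1 gives a separate line for each group
line_no  <- c(intercept = b0,      slope = b1)
line_yes <- c(intercept = b0 + b2, slope = b1 + b3)

rbind(no_diabetes = line_no, diabetes = line_yes)
```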

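As in simple regression, $R^2$ is the proportion of the variability in the response that is explained by the model.

As variables are added, $R^2$ never decreases, even if the added variables are unrelated to the response.

In the summary(lm()) output, Multiple R-squared is $R^2$.

Adjusted $R^2$ penalizes the number of predictors $p$:

$$R^2_{adj} = 1 - (1 - R^2)\,\frac{n - 1}{n - p - 1}.$$

Adjusted $R^2$ is:

- often used to balance predictive ability and model complexity.

- unlike $R^2$, able to decrease when an added variable contributes little.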
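As a check, this R sketch computes adjusted $R^2$ as $1 - (1 - R^2)(n-1)/(n-p-1)$. It reproduces the Adjusted R-squared values printed in the two PREVEND outputs, with $n = 500$ and each Education indicator counted as a separate predictor. The helper `adj_r2` is hypothetical, not a base R function.

```r
# Adjusted R^2 from R^2, sample size n, and number of predictors p
adj_r2 <- function(r2, n, p) 1 - (1 - r2) * (n - 1) / (n - p - 1)

# RFFT ~ Education: R^2 = 0.3072, n = 500, p = 3 indicator variables
adj_r2(0.3072, n = 500, p = 3)

# model1: R^2 = 0.4355, n = 500, p = 6 (Statin, Age, 3 Education indicators, CVD)
adj_r2(0.4355, n = 500, p = 6)
```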

Logistic regression

A generalization of methods for two-way tables, allowing for joint association between a binary response and several predictors.

Similar in intent to linear regression, but details differ a bit…

- the response variable is categorical (specifically, binary)

- the model is not estimated via minimizing least squares

- the model coefficients have a (very) different interpretation

Survival to discharge in the ICU

Patients admitted to ICUs are very ill — usually from a serious medical event (e.g., respiratory failure, stroke) or from trauma (e.g., traffic accident).

Question: Can patient features available at admission be used to estimate the probability of survival to hospital discharge?

The icu dataset in the aplore3 package is from a study conducted at Baystate Medical Center in Springfield, MA.

- The dataset contains information about patient characteristics at admission, including heart rate, diagnosis, kidney function, and much more.

- The sta variable includes labels Died (0 for death before discharge) and Lived (1 for survival to discharge).

Survival to discharge in the ICU: To CPR or not?

Let’s take a look at how ICU patients fare with CPR:

| Survival | No prior CPR | Prior CPR | Sum |
|---|---|---|---|
| Died | 33 | 7 | 40 |
| Lived | 154 | 6 | 160 |
| Sum | 187 | 13 | 200 |

- Odds of survival for those who did not receive CPR: 154/33 = 4.67

- Probability of survival for those who did not receive CPR: 154/187 = 0.82
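This short R sketch repeats the calculations for both CPR groups and adds the odds ratio that compares them. The counts are typed in from the table above.

```r
# Counts from the prior-CPR table above
died  <- c(no_cpr = 33,  cpr = 7)
lived <- c(no_cpr = 154, cpr = 6)

odds_survival <- lived / died              # odds = (# lived) / (# died)
prob_survival <- lived / (lived + died)    # probability = (# lived) / total
odds_ratio    <- odds_survival[["cpr"]] / odds_survival[["no_cpr"]]

round(odds_survival, 2)   # no_cpr 4.67, cpr 0.86
round(prob_survival, 2)   # no_cpr 0.82, cpr 0.46
round(odds_ratio, 2)      # 0.18
```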

Odds and probabilities

| Probability $p$ | Odds $= p/(1-p)$ | Odds (as a ratio) |
|---|---|---|
| 0 | 0/1 = 0 | 0 |
| 1/100 = 0.01 | 1/99 = 0.0101 | 1 : 99 |
| 1/10 = 0.10 | 1/9 = 0.11 | 1 : 9 |
| 1/4 | 1/3 | 1 : 3 |
| 1/3 | 1/2 | 1 : 2 |
| 1/2 | 1 | 1 : 1 |
| 2/3 | 2 | 2 : 1 |
| 3/4 | 3 | 3 : 1 |
| 1 | 1/0, undefined ($\to \infty$) | 1 : 0 |

Logistic regression

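Suppose $Y$ is a binary response variable, $X$ is a predictor, and $p$ is the probability that $Y = 1$.

- In our ICU example:

- Let $Y = 1$ if a patient survived to discharge and $Y = 0$ otherwise. Let $X = 1$ if the patient previously received CPR and $X = 0$ otherwise.

The model for a single variable logistic regression is

$$\log\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 X.$$

Logistic regression models the association between the probability $p$ of an event of interest and one or more predictors through the log odds, $\log[p/(1-p)]$.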

Why log(odds) in regression models?

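Since a probability can only take values from 0 to 1, a linear regression for a probability can fail, with predicted values falling outside the interval $[0, 1]$.

- The odds, $p/(1-p)$, take values from 0 to $\infty$.

- The natural log of the odds (log odds) ranges from $-\infty$ to $\infty$, which matches the range of a linear predictor $\beta_0 + \beta_1 X$.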
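This short R sketch converts probability to odds to log odds and back again. Base R's `qlogis()` and `plogis()` compute the log odds and its inverse directly.

```r
# Probability -> odds -> log odds, and back again
p <- c(0.01, 0.10, 0.25, 0.5, 0.75, 0.99)

odds     <- p / (1 - p)      # values in (0, Inf)
log_odds <- log(odds)        # values in (-Inf, Inf); same as qlogis(p)

# Inverse: log odds -> probability; same as plogis(log_odds)
p_back <- exp(log_odds) / (1 + exp(log_odds))

round(cbind(p, odds, log_odds), 3)
```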

Interpreting the output

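Recall that $p$ is the probability of survival to discharge and $X$ indicates prior CPR.

The logistic regression model's equation, estimated from the CPR table:

$$\log\left(\frac{\hat p}{1-\hat p}\right) = 1.540 - 1.695\,(\text{CPR})$$

The intercept $b_0 = 1.540$ is the estimated log odds of survival for patients who did not previously receive CPR:

- estimated odds of survival: $e^{b_0} = 154/33 \approx 4.67$

- estimated probability of survival: $e^{b_0}/(1 + e^{b_0}) = 154/187 \approx 0.82$

Interpreting the output…

The slope coefficient $b_1 = -1.695$ is the estimated difference in the log odds of survival between patients who did and did not previously receive CPR. Equivalently, $e^{b_1} \approx 0.18$ is the estimated odds ratio.

The odds of survival in patients who previously received CPR: $e^{b_0 + b_1} = 6/7 \approx 0.86$.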
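With CPR as the only predictor, the fitted coefficients are simply the sample log odds and log odds ratio from the two-way table. The R sketch below checks this by fitting `glm()` to the table counts in grouped form. The data frame `cpr_tab` is typed in by hand, and the aplore3 data are not loaded. Grouped binomial counts give the same estimates as the 200 individual records.

```r
# Grouped version of the CPR table: one row per CPR group
cpr_tab <- data.frame(cpr   = factor(c("No", "Yes")),
                      lived = c(154, 6),
                      died  = c(33, 7))

# Logistic regression of survival on prior CPR; cbind(successes, failures) response
fit <- glm(cbind(lived, died) ~ cpr, family = binomial(link = "logit"),
           data = cpr_tab)
round(coef(fit), 3)

# With one binary predictor the estimates equal the sample log odds and log odds ratio
log(154 / 33)                  # intercept: log odds of survival without CPR
log((6 / 7) / (154 / 33))      # slope: log of the odds ratio
exp(sum(coef(fit)))            # odds of survival with prior CPR, 6/7
```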

Inference for simple logistic regression

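As with linear regression, the regression slope captures information about the association between a response and a predictor. The hypotheses of interest are $H_0: \beta_1 = 0$ versus $H_A: \beta_1 \neq 0$.

These hypotheses can also be written in terms of the odds ratio: $H_0: e^{\beta_1} = 1$ versus $H_A: e^{\beta_1} \neq 1$.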

Logistic regression with multiple predictors

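Suppose $p$ is the probability of an event of interest.

With several predictors $X_1, \ldots, X_k$, the model is

$$\log\left(\frac{p}{1-p}\right) = \beta_0 + \beta_1 X_1 + \cdots + \beta_k X_k.$$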

Each coefficient estimates the difference in log(odds) for a one unit difference in that variable, for groups in which the other variables are the same.
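This R sketch illustrates "for groups in which the other variables are the same." It uses the estimates from the glm output below, typed in by hand. The helper `log_odds()` and the name `cre_gt2` for the `cre> 2.0` indicator are hypothetical. The code shows that a one-year age difference changes the log odds by the age coefficient, whatever the CPR and cre values.

```r
# Estimates copied from the glm(sta ~ cpr + cre + age) output below
b <- c(intercept = 3.32901, cprYes = -1.69680, cre_gt2 = -1.13328, age = -0.02814)

# Log odds of survival for a given profile (cpr and cre_gt2 are 0/1 indicators)
log_odds <- function(cpr, cre_gt2, age) {
  b[["intercept"]] + b[["cprYes"]] * cpr + b[["cre_gt2"]] * cre_gt2 + b[["age"]] * age
}

# One-year age difference with the other variables held fixed, for two profiles
d1 <- log_odds(cpr = 0, cre_gt2 = 0, age = 61) - log_odds(cpr = 0, cre_gt2 = 0, age = 60)
d2 <- log_odds(cpr = 1, cre_gt2 = 1, age = 61) - log_odds(cpr = 1, cre_gt2 = 1, age = 60)
c(d1, d2)         # both equal the age coefficient
exp(b[["age"]])   # estimated odds ratio per additional year of age

# Estimated probability of survival: 60-year-old, no prior CPR, cre not > 2.0
plogis(log_odds(cpr = 0, cre_gt2 = 0, age = 60))
```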

Survival versus CPR and age

```
Call:
glm(formula = sta ~ cpr + cre + age, family = binomial(link = "logit"),
    data = icu)

Coefficients:
            Estimate Std. Error z value Pr(>|z|)
(Intercept)  3.32901    0.74884   4.446 8.77e-06 ***
cprYes      -1.69680    0.62145  -2.730  0.00633 **
cre> 2.0    -1.13328    0.70191  -1.615  0.10641
age         -0.02814    0.01125  -2.502  0.01235 *
---
Signif. codes:  0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

(Dispersion parameter for binomial family taken to be 1)

    Null deviance: 200.16  on 199  degrees of freedom
Residual deviance: 181.47  on 196  degrees of freedom
AIC: 189.47

Number of Fisher Scoring iterations: 5
```

Inference for multiple logistic regression

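Typically, the hypotheses of interest are $H_0: \beta_j = 0$ versus $H_A: \beta_j \neq 0$, for each predictor $X_j$ with the other predictors held fixed.

These hypotheses can also be written in terms of the odds ratio: $H_0: e^{\beta_j} = 1$ versus $H_A: e^{\beta_j} \neq 1$.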
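This R sketch computes the Wald test and a 95% odds-ratio interval for the cprYes coefficient. It uses the estimate and standard error from the glm output above. The Wald approach matches the z value column. Note that `confint()` on a glm fit uses profile likelihood instead, while `confint.default()` gives the Wald version.

```r
# cprYes row of the glm(sta ~ cpr + cre + age) output above
est <- -1.69680
se  <- 0.62145

z     <- est / se                                    # Wald statistic for H0: beta = 0
p     <- 2 * pnorm(-abs(z))                          # two-sided p-value
or    <- exp(est)                                    # estimated odds ratio
ci_or <- exp(est + c(-1, 1) * qnorm(0.975) * se)     # 95% Wald CI for the odds ratio

round(c(z = z, p = p, OR = or), 4)
round(ci_or, 3)
```

Because the interval for the odds ratio lies entirely below 1, it agrees with rejecting $H_0: e^{\beta} = 1$ at the 5% level.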

Regression recap

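We considered the linear model $Y = \beta_0 + \beta_1 X_1 + \cdots + \beta_p X_p + \varepsilon$, with $n$ observations and $p$ predictors.

- When fitting the regression model, we used ordinary least squares to minimize the sum of squared residuals: $\sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij}\right)^2$.

- This doesn't work when the number of predictors exceeds the number of observations, i.e., $p > n$: the least squares solution is then no longer unique.

- The case $p > n$ is called the high-dimensional setting.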

Regularized regression

- In this high-dimensional setting, how do we choose the relevant predictors to fit the best model that we can?

- A penalized or regularized regression procedure changes the quantity we minimize to discourage choosing too many variables.

- Force estimates of

- Force estimates of

- Regularized regression is a general family of procedures involving different forms of penalization, including lasso, ridge, and elastic net.

Regularized regression: Ridge

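The ridge (or Tikhonov) regularized regression estimator minimizes a sum of squares subject to an $\ell_2$ penalty:

$$\sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij}\right)^2 + \lambda \sum_{j=1}^p \beta_j^2.$$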

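- The penalty (degree of regularization) is set by the tuning parameter $\lambda \ge 0$.

- As $\lambda$ increases, the estimates $\hat\beta_j$ are shrunk toward 0; $\lambda = 0$ gives ordinary least squares.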

- The choice of $\lambda$ is commonly made by cross-validation.
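Here is a minimal base-R sketch of ridge regression using its closed form on centered data. The function `ridge_fit` and the simulated data are hypothetical, for illustration only. With $\lambda = 0$ the fit reproduces `lm()`, and larger $\lambda$ shrinks the slopes toward 0.

```r
# Ridge regression in closed form: minimizes RSS + lambda * sum(beta_j^2).
# X and y are centered first so the intercept is not penalized.
ridge_fit <- function(X, y, lambda) {
  x_means <- colMeans(X)
  Xc <- sweep(X, 2, x_means)          # subtract each column mean
  yc <- y - mean(y)
  beta <- solve(crossprod(Xc) + lambda * diag(ncol(X)), crossprod(Xc, yc))
  beta <- setNames(drop(beta), colnames(X))
  c(intercept = mean(y) - sum(x_means * beta), beta)
}

# Simulated data (hypothetical, for illustration only)
set.seed(1)
n <- 50
X <- matrix(rnorm(n * 3), n, 3, dimnames = list(NULL, c("x1", "x2", "x3")))
y <- 1 + 2 * X[, "x1"] - X[, "x2"] + rnorm(n)

# One column per lambda; lambda = 0 reproduces ordinary least squares
lambdas <- c(0, 10, 100, 1000)
round(sapply(lambdas, function(l) ridge_fit(X, y, l)), 3)
coef(lm(y ~ X))
```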

Regularized regression: Lasso

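The lasso regularized regression estimator minimizes a sum of squares subject to an $\ell_1$ penalty:

$$\sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij}\right)^2 + \lambda \sum_{j=1}^p |\beta_j|.$$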

- Again, the penalty (degree of regularization) is controlled by the tuning parameter $\lambda \ge 0$.

- As $\lambda$ increases, more of the estimates $\hat\beta_j$ are set exactly to 0.

- There are many variations to the lasso (group lasso, fused lasso, etc.), and it is a popular variable selection strategy.
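The special case of an orthonormal design ($X^\top X = I$, no intercept) shows why the lasso selects variables while ridge only shrinks. We assume that design here for simplicity. Each penalized estimate is then a simple function of the OLS estimate, for penalties written as RSS plus $\lambda$ times the penalty. The lasso soft-thresholds the OLS estimate at $\lambda/2$, and ridge divides it by $1 + \lambda$. The sketch below uses hypothetical OLS estimates.

```r
# Assumes an orthonormal design (X'X = I) and no intercept, so each coefficient
# can be shrunk separately starting from its OLS estimate z_j
soft_threshold <- function(z, t) sign(z) * pmax(abs(z) - t, 0)

z <- c(3.0, -1.2, 0.4, -0.1)   # hypothetical OLS estimates
lambda <- 1

lasso <- soft_threshold(z, lambda / 2)   # minimizes RSS + lambda * sum(|beta_j|)
ridge <- z / (1 + lambda)                # minimizes RSS + lambda * sum(beta_j^2)

rbind(ols = z, lasso = lasso, ridge = ridge)
```

The two smallest OLS estimates become exactly 0 under the lasso, while ridge leaves every coefficient nonzero.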

Regularized regression: Elastic net

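The elastic net regularized regression estimator combines the ridge and lasso penalties. It is defined as the minimizer of

$$\sum_{i=1}^n \left(y_i - \beta_0 - \sum_{j=1}^p \beta_j x_{ij}\right)^2 + \lambda \left[\alpha \sum_{j=1}^p |\beta_j| + (1 - \alpha) \sum_{j=1}^p \beta_j^2\right], \qquad 0 \le \alpha \le 1.$$

- Note the correspondence to ridge regression (when $\alpha = 0$) and to the lasso (when $\alpha = 1$).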

- The elastic net overcomes some pitfalls of the lasso, including that the lasso can fail to isolate a best predictor from among a group of related predictors, with the
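Below is a minimal sketch of the elastic net fitted by cyclic coordinate descent in base R. The function `enet_fit` and the simulated data are hypothetical. The penalty is written as $\lambda[\alpha\sum_j|\beta_j| + (1-\alpha)\sum_j\beta_j^2]$. Packages such as glmnet use a similar mixing parameter with a different scaling, so their $\lambda$ values are not directly comparable. Setting `alpha = 0` recovers ridge, and `alpha = 1` recovers the lasso.

```r
# Elastic net by cyclic coordinate descent, minimizing
#   sum((y - b0 - X %*% b)^2) + lambda * (alpha * sum(abs(b)) + (1 - alpha) * sum(b^2))
# alpha = 1 is the lasso penalty, alpha = 0 is the ridge penalty.
enet_fit <- function(X, y, lambda, alpha, n_iter = 1000) {
  x_means <- colMeans(X)
  Xc <- sweep(X, 2, x_means)   # centering leaves the intercept unpenalized
  yc <- y - mean(y)
  beta <- rep(0, ncol(X))
  for (it in seq_len(n_iter)) {
    for (j in seq_len(ncol(X))) {
      r_j <- yc - Xc[, -j, drop = FALSE] %*% beta[-j]   # partial residual without x_j
      z <- sum(Xc[, j] * r_j)
      # soft-threshold for the L1 part, extra denominator term for the L2 part
      beta[j] <- sign(z) * max(abs(z) - lambda * alpha / 2, 0) /
        (sum(Xc[, j]^2) + lambda * (1 - alpha))
    }
  }
  names(beta) <- colnames(X)
  c(intercept = mean(y) - sum(x_means * beta), beta)
}

# Simulated data (hypothetical): x1 and x2 are strongly correlated, x3 is noise
set.seed(2)
n <- 100
x1 <- rnorm(n)
x2 <- x1 + rnorm(n, sd = 0.3)
x3 <- rnorm(n)
X <- cbind(x1, x2, x3)
y <- 1 + x1 + x2 + rnorm(n)

round(rbind(lasso       = enet_fit(X, y, lambda = 20, alpha = 1),
            elastic_net = enet_fit(X, y, lambda = 20, alpha = 0.5),
            ridge       = enet_fit(X, y, lambda = 20, alpha = 0)), 3)
```

Compare how the correlated predictors x1 and x2 share the weight under each penalty.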

Generalization in regression

Regression methods borrow information across

- How do we choose

- Borrowing (or smoothing) across all

- borrowing too much across

- borrowing too little across

- the goal is to find the right balance: bias-variance trade-off


- Can we use the available data to make an optimal choice of

Generalization in regression

What if we evaluate a large collection (e.g., in

- we can still entirely pre-specify the whole estimation procedure

- …but we need to determine how to pick the best technique

Can we run a fair competition between estimators to determine the best? If so, how would we go about doing this?

Cross-validation and generalization

How can we estimate the risk of an estimator?

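Idea: Use the empirical risk estimate $\hat R = \frac{1}{n}\sum_{i=1}^n \left(y_i - \hat f(x_i)\right)^2$, where $\hat f$ is the fitted regression function.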

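Problem: the same data are used both to fit the regression and to evaluate it, so the empirical risk is overly optimistic.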
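A small simulation sketch of the problem follows; all data are simulated and hypothetical. As the polynomial degree grows, the empirical risk on the data used for fitting keeps falling. The risk on a large independent sample, standing in for external data, typically does not.

```r
# Simulated data (hypothetical): the true regression function is a straight line
set.seed(3)
n <- 30
x <- runif(n)
y <- 2 * x + rnorm(n, sd = 0.5)

# A large independent sample from the same distribution stands in for "external data"
x_new <- runif(10000)
y_new <- 2 * x_new + rnorm(10000, sd = 0.5)

# Mean squared prediction error of a fitted model on the data (x, y)
mse <- function(fit, x, y) mean((y - predict(fit, newdata = data.frame(x = x)))^2)

for (deg in c(1, 5, 10)) {
  fit <- lm(y ~ poly(x, deg))
  cat(sprintf("degree %2d: empirical risk %.3f, risk on new data %.3f\n",
              deg, mse(fit, x, y), mse(fit, x_new, y_new)))
}
```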

Cross-validation and generalization

By analogy: A student got a glimpse of the exam before taking it.

- focused on learning test questions very well…but

- test result doesn’t reflect how well student learned the subject

With

Cross-validation and generalization

When we use the same data to fit the regression function and to evaluate it:

- we are overly optimistic about how well each estimator is fit;

- so, we would select the wrong estimator.

What we need is external data to properly evaluate estimators.

- We could get a better idea of how well estimators are doing.

- We could objectively select the correct estimator to use.

- …but such data are rarely available.

Cross-validation

Instead, we use cross-validation to estimate the risk.

- Fit regression on part of the data, evaluate on another part.

- Mimics the evaluation of the given fit on external data.

- Many forms of cross-validation1:

Cross-validation

Data are divided into

- Training sample is used to fit (“train”, “learn”) the regressions.

- Validation sample is used to estimate risk (“validate”, “test”, “evaluate”).

Several factors to consider when choosing

- large

- small
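Here is a minimal R sketch of cross-validation used to run a fair competition among candidate estimators: polynomial fits of degrees 1 to 6 on simulated data. The number of folds is called `n_folds` here (set to 10), and the helper `cv_risk` is hypothetical. Every candidate is evaluated on the same folds, so the comparison is even-handed.

```r
# Simulated data (hypothetical): quadratic trend plus noise
set.seed(4)
n <- 100
dat <- data.frame(x = runif(n, -2, 2))
dat$y <- 1 + dat$x - 0.5 * dat$x^2 + rnorm(n)

# Randomly assign each observation to one of n_folds folds, shared by all candidates
n_folds <- 10
folds <- sample(rep(seq_len(n_folds), length.out = n))

# Cross-validated risk (mean squared prediction error) of a degree-`deg` polynomial
cv_risk <- function(deg, data, folds) {
  sq_err <- numeric(nrow(data))
  for (k in unique(folds)) {
    train <- data[folds != k, ]   # training sample: fit the regression
    valid <- data[folds == k, ]   # validation sample: estimate the risk
    fit <- lm(y ~ poly(x, deg, raw = TRUE), data = train)
    sq_err[folds == k] <- (valid$y - predict(fit, newdata = valid))^2
  }
  mean(sq_err)
}

degrees <- 1:6
risks <- sapply(degrees, cv_risk, data = dat, folds = folds)
round(setNames(risks, paste("degree", degrees)), 3)
degrees[which.min(risks)]   # candidate with the smallest estimated risk
```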

HST 190: Introduction to Biostatistics